Understanding How Google Lens Recognizes Objects in Images

Google Lens works like a helpful guide that looks at a photo and connects it to useful information. When you point your camera at something, it can recognize what is in front of you, read the text, and even identify a place you are visiting. It does this by combining careful image processing, trained AI models, and search systems that compare your photo against patterns the models have learned. The result feels quick, but there are several clear steps happening in the background.

1. The Core Idea Behind Google Lens Recognition

Google Lens is built around a simple goal: turn pixels in a photo into meaning you can use. It first tries to understand what the camera captured, then it decides which kind of answer fits best, like an object label, extracted text, or a place name. After that, it connects what it found to results such as web pages, map listings, or actions like copying text. This flow stays similar even when the photo is very different, like a menu, a plant, or a monument.

1.1 From Photo Pixels to Useful Signals

A camera photo is a grid of color values, so the first job is to make it easier for a model to read. Lens can adjust for lighting, sharpen edges, and reduce noise so important details stand out. It also looks for clear regions, like a signboard area, a shoe outline, or a building shape.

After that, the image is converted into internal signals, often called features. Features are patterns like curves, corners, textures, and color relationships. These patterns are what the AI model can measure and compare.
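To make the idea of "features" concrete, here is a minimal sketch that stretches the contrast of a tiny grayscale grid and then measures edge strength between neighbouring pixels. It is only an illustration: Lens relies on learned features inside neural networks, and the helper names `normalize` and `edge_strength` are hypothetical.

```python
from typing import List

Image = List[List[float]]  # grayscale grid, pixel values between 0 and 1


def normalize(img: Image) -> Image:
    """Stretch pixel values to the full 0..1 range (a simple lighting fix)."""
    flat = [p for row in img for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0 for _ in row] for row in img]
    return [[(p - lo) / (hi - lo) for p in row] for row in img]


def edge_strength(img: Image) -> Image:
    """Sum of absolute differences to the right and lower neighbour."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx = img[y][x + 1] - img[y][x] if x + 1 < w else 0.0
            dy = img[y + 1][x] - img[y][x] if y + 1 < h else 0.0
            out[y][x] = abs(dx) + abs(dy)
    return out


# A dull photo with a vertical boundary between a dark and a lighter area.
photo = [
    [0.2, 0.2, 0.6, 0.6],
    [0.2, 0.2, 0.6, 0.6],
    [0.2, 0.2, 0.6, 0.6],
]
edges = edge_strength(normalize(photo))
```

After normalization the boundary column stands out with a strong response while flat regions stay at zero, which is the kind of measurable pattern a model can compare.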

1.2 On-Device Steps vs Cloud Steps

Some parts of Lens can run on your phone, especially the early steps like finding text blocks or spotting the main object area. On-device work improves speed and can reduce how much data needs to be sent. It also helps Lens react quickly while you are still moving the camera.

Other steps may use cloud systems, especially when the request needs a large database comparison. Matching a landmark or finding visually similar items often benefits from big indexes stored on servers. The split depends on the phone, the connection, and the task.

1.3 The Models Behind Recognition

Lens relies on neural networks trained on huge sets of images and labels. A common approach uses convolutional neural networks or newer vision transformer models that learn what visual patterns usually mean. These models learn to pick up on both small details and bigger shapes.

The model does not “see” like a human, but it becomes very good at predicting what category fits a set of patterns. Over time, training improves accuracy for specific things such as pets, plants, food, and consumer products.

1.4 Embeddings and Visual Fingerprints

A key trick is turning the photo, or part of the photo, into a compact set of numbers called an embedding. You can think of an embedding as a visual fingerprint that captures the essence of what is shown. Two images of the same object, even from different angles, can end up with embeddings that are close.

This makes searching much faster because Lens can compare numbers instead of comparing full images. It can scan large collections and pull out the closest matches using similarity scoring.
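The comparison of embeddings can be sketched with cosine similarity, one common similarity score. The three-number vectors and item names below are made up for illustration; real embeddings have hundreds of dimensions, and the scoring Lens actually uses is not documented here.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def nearest(query: List[float],
            index: Dict[str, List[float]],
            k: int = 2) -> List[Tuple[str, float]]:
    """Return the k indexed items most similar to the query embedding."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical index of stored embeddings.
index = {
    "red sneaker": [0.9, 0.1, 0.0],
    "blue sneaker": [0.7, 0.0, 0.6],
    "potted fern": [0.0, 0.9, 0.3],
}
query = [0.8, 0.2, 0.1]  # embedding of the user's photo (made up)
matches = nearest(query, index, k=2)
```

This brute-force scan checks every item. Large collections typically use approximate nearest-neighbour indexes instead, which trade a little accuracy for much faster lookups.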

1.5 Ranking Results So They Feel Right

Even when Lens finds several possible matches, it still needs to choose what to show first. Ranking systems weigh confidence scores, image quality, and how common the match is. For example, if a photo has a clear logo and a product outline, it may rank a brand match higher.

Lens also considers what kind of content people usually want from that situation. If it sees lots of text, it may prioritize copy and translate actions. If it sees a building facade, it may prioritize a place name and map listing.
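One way to picture this kind of ranking is a weighted score with a boost for results that fit the scene. The sketch below is an assumption-heavy toy: the `Candidate` fields, the weights, and the 1.2 boost are invented for illustration and do not describe Google's actual ranking.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    label: str
    kind: str          # "text", "product", or "place" (hypothetical kinds)
    confidence: float  # model confidence, 0..1
    popularity: float  # how common the match is, 0..1


# Illustrative weights only.
WEIGHTS = {"confidence": 0.7, "popularity": 0.3}
SCENE_BOOST = 1.2


def score(c: Candidate, image_quality: float, scene_kind: str) -> float:
    """Blend confidence (scaled by image quality) with popularity."""
    base = (WEIGHTS["confidence"] * c.confidence * image_quality
            + WEIGHTS["popularity"] * c.popularity)
    # Favour the kind of answer people usually want for this scene.
    boost = SCENE_BOOST if c.kind == scene_kind else 1.0
    return base * boost


def rank(cands: List[Candidate], image_quality: float, scene_kind: str) -> List[Candidate]:
    return sorted(cands, key=lambda c: score(c, image_quality, scene_kind), reverse=True)


candidates = [
    Candidate("Copy text", "text", 0.80, 0.5),
    Candidate("Acme running shoe", "product", 0.85, 0.6),
    Candidate("City museum", "place", 0.40, 0.9),
]
text_heavy = rank(candidates, image_quality=0.9, scene_kind="text")
product_shot = rank(candidates, image_quality=0.9, scene_kind="product")
```

With a text-heavy scene the copy action moves ahead of the slightly more confident product match, while a product shot keeps the product first.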

1.6 Context Signals That Improve the Guess

Lens can use extra hints that come with the photo, such as the camera angle, the time, or a general location if that setting is enabled. Even small context clues can help choose between similar options, like two landmarks with comparable shapes. When the model has a strong visual match, context plays a smaller role.

It also learns from aggregate usage patterns, like which result people tend to tap for certain kinds of images. That feedback can improve future ranking without retraining the recognition model each time.

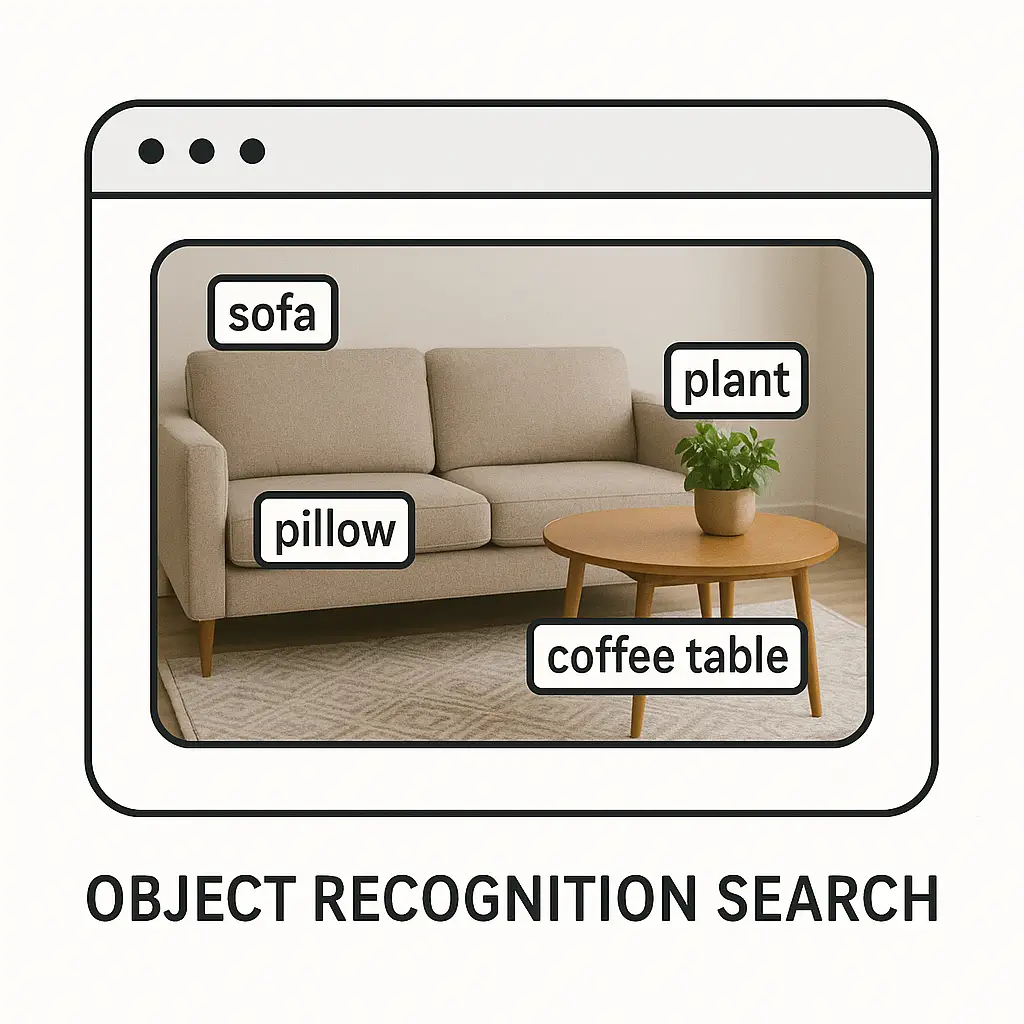

2. How Google Lens Identifies Everyday Objects

When Lens identifies objects, it usually follows a detect-then-classify approach. First it finds the region of interest, like the part of the photo that looks like a chair or a flower. Then it predicts what that region is most likely to be. In many cases, it also tries to go beyond a broad label and offer a more specific result you can act on.

2.1 Detecting the Main Object in the Frame

Object detection models look for boundaries and shapes that stand out from the background. They can propose boxes or outlines around items, sometimes multiple at once. This is why Lens can highlight more than one object, like a laptop and a phone on a table.

If the scene is cluttered, the model often focuses on the most prominent object first. Clear contrast, sharp focus, and a simple background usually help the detection step work better.
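Detectors often propose several overlapping boxes for the same item, so a cleanup step is needed before highlighting objects. The sketch below uses non-maximum suppression, a standard technique for this. Whether Lens uses exactly this step is an assumption, and the boxes and scores are invented.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
Detection = Tuple[Box, float]            # (box, confidence score)


def iou(a: Box, b: Box) -> float:
    """Intersection over union: overlap area divided by combined area."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(dets: List[Detection], iou_threshold: float = 0.5) -> List[Detection]:
    """Keep the highest-scoring box of each overlapping group."""
    kept: List[Detection] = []
    for box, sc in sorted(dets, key=lambda d: d[1], reverse=True):
        if all(iou(box, k[0]) < iou_threshold for k in kept):
            kept.append((box, sc))
    return kept


detections = [
    ((10, 10, 110, 80), 0.92),    # laptop
    ((12, 14, 112, 84), 0.75),    # near-duplicate box on the same laptop
    ((150, 40, 190, 110), 0.88),  # phone
]
kept = non_max_suppression(detections)
```

The duplicate laptop box is dropped because it overlaps heavily with a higher-scoring one, leaving one box each for the laptop and the phone, ordered by score so the most prominent object comes first.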

2.2 Classifying What the Object Is

Once a region is selected, the classifier predicts a category. This may start broad, like “shoe” or “chair,” then move to something narrower, like “running shoe” or “office chair.” The prediction is based on learned patterns such as shape, texture, and typical parts.

The model is trained on many examples, so it learns variation. A dog in bright sunlight and a dog indoors still share key patterns, so the model can generalize. This is why Lens can still work even when your photo is not perfect.

2.3 Fine-Grained Recognition for Similar Items

Some categories are easy, while others need fine-grained recognition. For example, many plants look alike, and many car models share similar fronts. Fine-grained models focus on subtle differences such as leaf edges, petal shapes, grille patterns, or logo placement.

Lens may combine a general model with specialized models for certain domains. That is why results for plants, animals, and food often look more detailed than a simple object label.

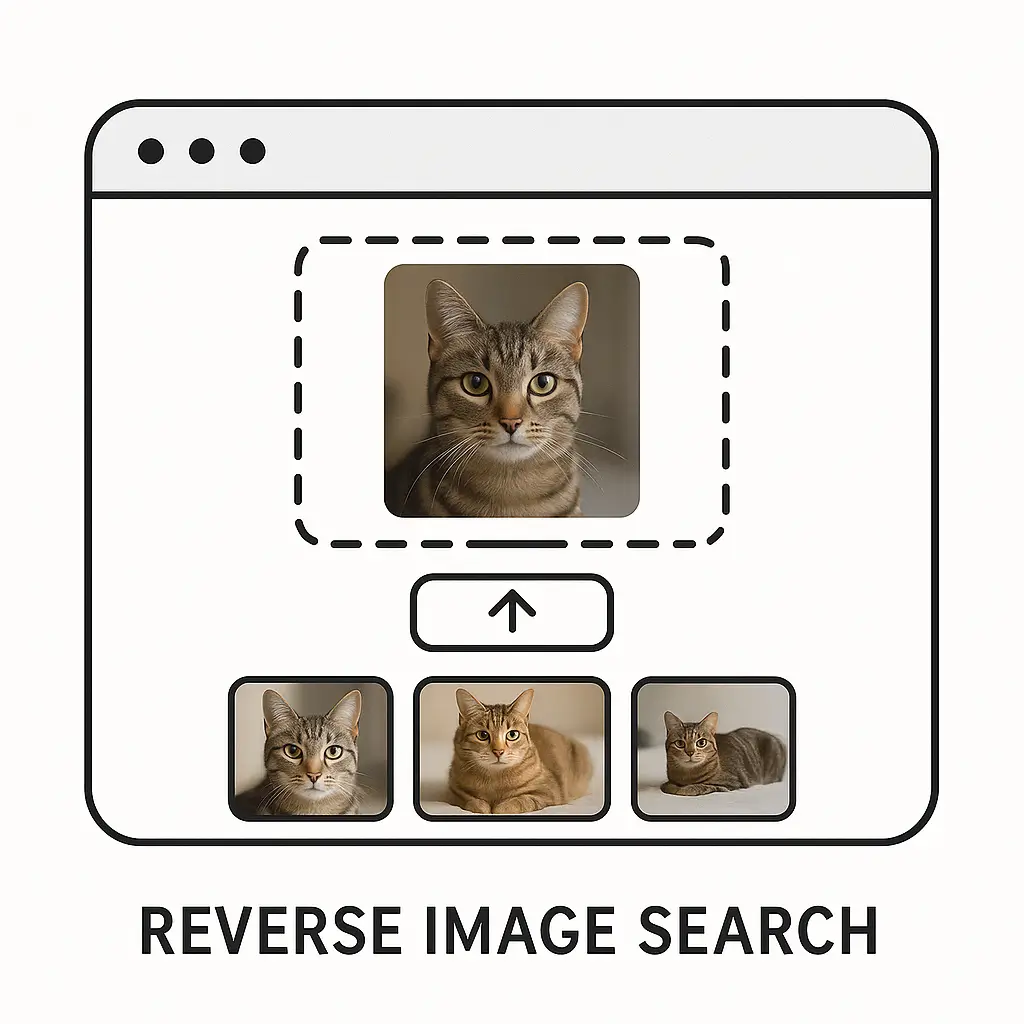

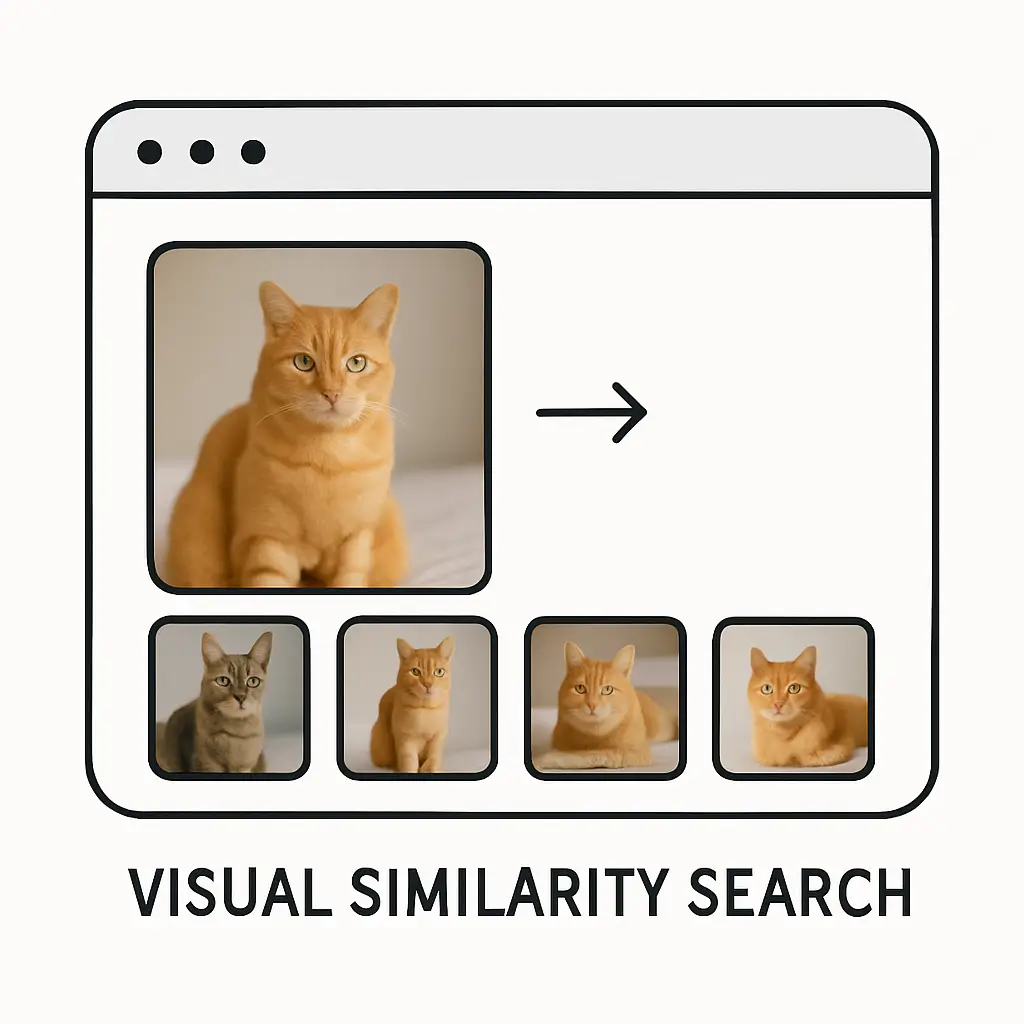

2.4 Matching Visually Similar Products and Items

When Lens tries to find “similar” items, it leans heavily on embeddings and similarity search. Instead of asking “what is this,” it asks “what looks most like this.” That is helpful for furniture, clothing, accessories, and decor where style matters.

If you take a photo of a patterned lamp, Lens might return lamps with a matching silhouette and shade texture. The match is not only about brand, but also about visual shape and design cues.

2.5 Using Surrounding Clues to Improve Object Results

Objects do not exist alone, so Lens also reads the scene context. A round thing in a kitchen may be treated differently than a round thing in a sports field. Background patterns can help the model avoid odd guesses that do not fit the setting.

Text near an object can also help a lot. A label on a bottle, a brand name on packaging, or a model number on a sticker can push the result from “electronics” to an exact product line.

2.6 Why Object Recognition Can Vary

Object recognition depends on image quality and viewpoint. Blurry photos, heavy shadows, and extreme angles can reduce the model’s confidence. Objects that are partially hidden or unusually shaped can also lead to broader guesses.

Sometimes the object is too new or too rare to match well. In those cases, Lens may still return helpful general categories or visually similar items, even if it cannot name the exact model.
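Falling back to a broader guess when confidence is low can be sketched as a walk up a category tree. The tiny taxonomy, the 0.6 threshold, and the function names below are assumptions for illustration, not a description of Lens internals.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical taxonomy: each label points to its broader parent.
PARENT: Dict[str, Optional[str]] = {
    "running shoe": "shoe",
    "hiking boot": "shoe",
    "shoe": "footwear",
    "footwear": None,
    "office chair": "chair",
    "chair": "furniture",
    "furniture": None,
}


def ancestors(label: str) -> List[str]:
    """The label followed by each broader category above it."""
    chain = [label]
    while PARENT.get(chain[-1]) is not None:
        chain.append(PARENT[chain[-1]])
    return chain


def label_with_backoff(leaf_scores: Dict[str, float],
                       threshold: float = 0.6) -> Tuple[str, float]:
    """Return the most specific label whose pooled score clears the threshold."""
    totals: Dict[str, float] = {}
    for leaf, p in leaf_scores.items():
        for node in ancestors(leaf):
            totals[node] = totals.get(node, 0.0) + p
    best_leaf = max(leaf_scores, key=leaf_scores.get)
    chain = ancestors(best_leaf)
    for node in chain:
        if totals[node] >= threshold:
            return node, totals[node]
    return chain[-1], totals[chain[-1]]  # nothing cleared: use the broadest label


sharp = {"running shoe": 0.82, "hiking boot": 0.10, "office chair": 0.08}
blurry = {"running shoe": 0.45, "hiking boot": 0.40, "office chair": 0.15}
sharp_label = label_with_backoff(sharp)
blurry_label = label_with_backoff(blurry)
```

For the sharp photo the specific label wins. For the blurry one neither shoe type is convincing alone, but their pooled score supports the broader label "shoe".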

3. How Google Lens Reads Text and Understands It

Text recognition is one of the most practical parts of Lens because it turns signs, pages, labels, and screens into usable text. It usually begins by finding where text is, then converting those pixels into characters, and finally offering actions like copy, search, or translate. The pipeline is designed to work on many fonts and many real-life lighting conditions.

3.1 Detecting Text Regions in a Photo

Before reading text, Lens must locate it. Text detection models look for repeating strokes, consistent spacing, and alignment that resembles lines of writing. They identify blocks of text even if they are tilted, curved, or printed on textured surfaces.

This is why Lens can pick up text on a menu board, a book page, or product packaging. It tries to isolate text areas from the background so that the reading step has cleaner input.

3.2 OCR Converts Images into Characters

OCR, or optical character recognition, is the step that turns the detected text image into actual letters and numbers. Modern OCR uses neural networks that learn character shapes and how characters connect in words. It can handle multiple fonts, different sizes, and common distortions.

If a photo is slightly angled, Lens can apply perspective correction. It can also sharpen the text area so letters become clearer. These small adjustments make OCR output more accurate and easier to use.

3.3 Language Detection and Script Understanding

Lens often needs to know which language and script it is dealing with. The same shapes can be interpreted differently across scripts, so identifying the script first helps avoid incorrect character guesses. It can spot whether a line is written in Devanagari (used for Hindi), Latin (used for English), Arabic, Japanese kana and kanji, or another script.

After language detection, the OCR model can apply the right reading rules. This improves accuracy for characters that look similar across languages but behave differently in words.
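A simplified view of script identification is shown below. Note the assumption: this sketch inspects characters that have already been decoded, for example as a sanity check on OCR output, whereas a real pipeline would usually estimate the script from the pixels before reading. It uses Python's standard `unicodedata` module, where the first word of a character's Unicode name indicates its script.

```python
import unicodedata
from collections import Counter


def dominant_script(text: str) -> str:
    """Return the most common script among the letters in the text."""
    counts: Counter = Counter()
    for ch in text:
        if ch.isalpha():
            name = unicodedata.name(ch, "")
            if name:
                # e.g. "DEVANAGARI LETTER NA" -> "DEVANAGARI"
                counts[name.split()[0]] += 1
    return counts.most_common(1)[0][0] if counts else "UNKNOWN"


samples = ["Exit 12", "नमस्ते", "مرحبا", "こんにちは", "12345"]
scripts = [dominant_script(s) for s in samples]
```

Digits and punctuation are ignored, so a line containing only numbers reports "UNKNOWN" and would need other clues, such as neighbouring lines, to pick a script.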

3.4 Translation Overlay and What It Requires

When you use translation, Lens does more than swap words. It first extracts the text, translates it, and then tries to place the translated text back into the image in a readable way. This involves estimating the original text layout, line breaks, and font size so the overlay looks natural.

Many people also use the Google Translate app for the same kind of camera translation, especially when traveling. It is handy for menus, street signs, and quick instructions, because you can keep looking at the real object instead of switching back and forth between apps.

3.5 Copy, Search, and Actions on Recognized Text

Once Lens extracts text from an image, it can suggest practical actions based on what it finds. You may see options to copy or share the text, search it online, or open related links. If the text includes an address, Lens can suggest viewing it in maps. When it detects a phone number, it may offer the option to call, and if an email address is recognized, it can help start a new message.
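Suggesting actions from recognized text can be approximated with pattern matching. The regular expressions and action names below are deliberately simple and hypothetical. Production systems use far more robust entity detection, and address detection is left out because it is much harder to capture with a pattern.

```python
import re
from typing import List

# Loose illustrative patterns, not complete validators.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
URL_RE = re.compile(r"https?://[^\s]+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{6,}\d")


def suggest_actions(text: str) -> List[str]:
    """Map recognized text to a list of suggested actions."""
    actions = ["copy", "search"]  # always available for any text
    if EMAIL_RE.search(text):
        actions.append("compose_email")
    if URL_RE.search(text):
        actions.append("open_link")
    if PHONE_RE.search(text):
        actions.append("call")
    return actions


poster = "Call us: +1 (555) 010-9999"
card = "Contact jane@example.com or visit https://example.com/info"
poster_actions = suggest_actions(poster)
card_actions = suggest_actions(card)
```

Plain text with no recognizable entity still gets the default copy and search actions, which matches the idea that those options are always offered.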

A practical workflow is copying text from a printed page into Google Docs for editing, especially when you have short notes or a single paragraph. It saves retyping when you just want the text in a clean, editable form.

3.6 Handwriting, Screens, and Tricky Surfaces

Handwriting is harder than printed text because letter shapes vary from person to person. Lens can still read many handwriting styles, especially when writing is clear and spaced. It works best when the background is plain and the ink contrast is strong.

Screens can also be tricky due to glare and moire patterns. Lens may reduce reflection effects and sharpen the text region. If glare is heavy, changing the angle slightly often helps Lens capture more readable letters.

4. How Google Lens Recognizes Landmarks and Places

Landmark recognition is part visual matching and part knowledge mapping. Lens looks for distinctive structures, patterns, and shapes that often define a location. Then it compares those features to known place images and map data so it can suggest the name, history, or visitor information. This is why a single building photo can quickly turn into a rich set of place details.

4.1 Visual Feature Matching for Famous Structures

A landmark photo is full of edges, corners, and repeating patterns, like arches, columns, and window grids. Lens extracts strong features that are stable even when the photo is taken from different angles. These features are compared against a large set of known landmark features.

This comparison is similar to how general image matching works, but tuned for buildings and scenes. The approach overlaps with general image search techniques, because both rely on feature similarity and ranking of close matches.

4.2 Using Location Hints When Available

If location signals are available, they can narrow the search. A photo taken in Delhi is more likely to match a monument in that region than one in another country. Even a rough area estimate can cut down the number of candidates.

This does not mean Lens must know your exact location to work. A very distinctive landmark can be recognized from visuals alone. Location hints simply help when many places look similar or when the image is less clear.
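Narrowing candidates with a rough location can be sketched with a great-circle distance filter. The landmark list, the approximate coordinates, and the 50 km radius are assumptions chosen for illustration. When no hint is available, the sketch keeps every candidate so visual matching alone decides.

```python
import math
from typing import Dict, List, Optional, Tuple

Coord = Tuple[float, float]  # (latitude, longitude) in degrees


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometres."""
    r = 6371.0  # mean Earth radius
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


# Approximate coordinates for three visually similar arches.
LANDMARKS: Dict[str, Coord] = {
    "India Gate": (28.6129, 77.2295),
    "Gateway of India": (18.9220, 72.8347),
    "Arc de Triomphe": (48.8738, 2.2950),
}


def nearby_candidates(hint: Optional[Coord],
                      radius_km: float = 50.0,
                      landmarks: Dict[str, Coord] = LANDMARKS) -> List[str]:
    """Keep landmarks within radius_km of the hint, or all if there is no hint."""
    if hint is None:
        return list(landmarks)
    lat, lon = hint
    return [name for name, (la, lo) in landmarks.items()
            if haversine_km(lat, lon, la, lo) <= radius_km]


delhi_hint = (28.61, 77.21)  # rough area estimate, not an exact position
in_delhi = nearby_candidates(delhi_hint)
no_hint = nearby_candidates(None)
```

A rough hint in Delhi leaves only India Gate to compare visually, while a missing hint leaves all three arches in play.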

4.3 Understanding Architecture and Regional Style

Landmarks often have style cues tied to a culture or time period, like domes, spires, carvings, or building materials. Lens models can learn these patterns and use them as clues. This helps it suggest a region or a style even when it cannot confidently name the exact landmark.

It can also distinguish natural landmarks such as mountains, waterfalls, and coastlines by learning terrain and horizon patterns. In those cases, it may lean on map-based knowledge and common viewpoints from public photos.

4.4 Handling Night Photos and Weather Conditions

Night photos change colors and reduce detail, which can confuse recognition. Lens may use brighter edges and shape outlines more than textures. Long exposure blur can soften patterns, so the model focuses on strong geometry like the outline of a tower or bridge.

Weather such as fog or rain can also hide details. In those cases, Lens may still propose likely matches based on partial cues. A better result often comes from taking a second photo with a slightly different angle or closer framing.

4.5 Similar Landmarks and Disambiguation

Some landmarks share similar architecture, like multiple cathedrals or multiple forts built in a related style. Lens can return a small set of candidates rather than one confident answer. It may also show nearby options, especially if you are close to a cluster of known sites.

This is where extra context helps, like street signs in the photo or a recognizable nearby structure. Even a small piece of readable text near the landmark can push the match toward the right place.
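Returning a short list instead of a single answer can be modelled with a score margin: any candidate that scores close to the best one stays in the list. The margin of 0.1, the cap of three results, and the place names are illustrative assumptions.

```python
from typing import Dict, List


def shortlist(scores: Dict[str, float],
              margin: float = 0.1,
              max_results: int = 3) -> List[str]:
    """Return the top match plus any runner-up within `margin` of it."""
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    if not ranked:
        return []
    top_score = ranked[0][1]
    return [name for name, s in ranked[:max_results] if top_score - s <= margin]


ambiguous = {"Cathedral A": 0.62, "Cathedral B": 0.58, "Fort C": 0.20}
clear = {"Tower X": 0.93, "Tower Y": 0.30}
ambiguous_result = shortlist(ambiguous)
clear_result = shortlist(clear)
```

When two cathedrals score almost the same, both are offered and the user or extra context settles it. A clear winner is returned alone.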

4.6 Feedback Loops That Improve Place Results

When people tap a result, open a map listing, or refine a query, that interaction can help improve future performance at scale. Over time, common landmark photos become easier to recognize because the system has more examples of how people capture them. New viewpoints, like unusual angles, become part of the learned variation.

If Lens gives multiple options, choosing the correct one is also a quiet form of feedback. It helps the system understand which visual cues mattered and which ones were less useful for that type of place.

6. Privacy, Safety, and Data Handling in Google Lens

Using a camera to understand the world naturally raises privacy concerns for many users. Google Lens is designed with safeguards that aim to reduce unnecessary data use while still delivering helpful results. The focus stays on interpreting images rather than collecting or profiling personal information.

Privacy considerations influence how images are processed, stored, and removed after use. Clear boundaries help ensure the tool remains useful without crossing into sensitive or inappropriate areas. These design choices are meant to balance convenience with responsible data handling.

6.1 What Happens to Photos You Scan

When an image is scanned, it may be processed directly on the device or securely analyzed elsewhere depending on the task. The purpose of this processing is to extract useful information, not to store photos permanently. Many scans are handled briefly and then discarded.

Users can review activity settings and manage stored interactions if they choose. This visibility allows people to understand how their data is used. Control options help users decide what they are comfortable keeping or removing.

6.2 On-Device Processing for Sensitive Tasks

On-device processing is used where practical, especially for tasks like detecting text or outlining objects. Keeping work on the device reduces data transfer and improves response time. It also limits exposure of personal images.

As smartphones become more capable, more features can run locally. This trend supports better privacy while maintaining strong performance. It also helps Lens feel faster and more responsive in everyday use.

6.3 Avoiding Recognition of Sensitive Attributes

Google Lens avoids identifying sensitive personal attributes from images. It does not attempt to infer personal traits, identity, or private details based on appearance. The system focuses strictly on objects, text, and locations.

When people appear in photos, Lens usually ignores them unless the user clearly targets something like clothing or an accessory. This boundary reduces misuse and keeps recognition focused on safe, practical tasks.

6.4 Handling Faces and Personal Content

Lens does not identify individuals by name or link faces to identities. While it may detect the presence of a face, it does not label who the person is. This prevents casual photos from becoming personal identifiers.

For personal documents such as mail or IDs, text recognition happens only when the user chooses. Sensitive information is not automatically highlighted or shared. Control remains firmly with the user.

6.5 User Controls and Transparency

Users have access to settings that allow them to manage Lens-related activity. Past interactions can be reviewed, cleared, or limited based on personal preference. Clear explanations help users understand available options.

Transparency builds confidence in how the tool works. When people understand data handling clearly, they can use the feature comfortably. This openness supports trust and responsible use.

6.6 Limits and Responsible Use

No recognition system is perfect, and Google Lens includes built-in limits. In sensitive cases, it may provide only general information or avoid recognition entirely. These limits help reduce harm and incorrect assumptions.

Responsible use also depends on users. Understanding that results are informational rather than definitive helps maintain realistic expectations. Lens is designed to assist, not replace human judgment.

7. Real World Examples of Google Lens in Daily Use

Google Lens becomes easier to understand when viewed through everyday situations. The same recognition systems support tasks such as studying, shopping, traveling, and organizing information. These examples show how visual understanding fits naturally into daily routines.

Each use case combines object recognition, text extraction, or place identification. The goal is to solve small, real problems quickly and smoothly. Lens works best when it supports simple decisions without interrupting normal activity.

7.1 Learning From Books and Printed Pages

Students often scan textbook pages, notes, or printed handouts to extract text for further use. This makes it easier to search explanations online, translate content into another language, or save information digitally for later reference. It is especially helpful when working with printed material that cannot be edited directly.

This approach reduces manual effort and saves a significant amount of time. Even a short scan can turn printed content into clean, editable text that can be organized across apps and devices. The result is smoother studying, better note management, and faster access to information when needed.

7.2 Understanding Products While Shopping

A photo of a product can reveal its general type, category, or visually similar items. This helps when you come across something unfamiliar and want to understand what it is or how it is usually used. Visual matches provide a clear starting point for learning more.

People often combine this with basic web searches to gather additional context. The intention is usually to compare features, understand purpose, or learn background information. It supports informed decisions without creating pressure to buy or act immediately.

7.3 Travel and Exploring New Places

While traveling, Lens can identify landmarks, read street signs, and translate menus into a familiar language. This reduces confusion and helps people feel more confident when navigating unfamiliar environments. Simple questions can be answered without interrupting the experience.

Maps and place information add useful background and orientation. Casual exploration becomes more informative without requiring detailed planning in advance. Travelers can focus on enjoying their surroundings instead of constantly searching for information.

7.4 Organizing Information From the Environment

Lens can capture details from business cards, posters, flyers, or public notices. Recognized information such as phone numbers, addresses, or dates can be saved or used immediately. This prevents important details from being forgotten or lost.

Physical information becomes easier to manage in digital form. This is especially helpful in work settings, conferences, or events where information appears briefly. Quick capture supports better organization and timely follow-up.

7.5 Helping With Learning and Curiosity

Many people use Lens out of curiosity rather than necessity. Scanning a plant, animal, or everyday object can lead to new knowledge and spark interest. This encourages learning through observation.

This type of exploration fits naturally into daily life. It requires no setup, planning, or formal goal. Curiosity can be satisfied in moments, making learning feel effortless and enjoyable.

7.6 When Results Are Partial but Still Helpful

Sometimes Lens cannot provide an exact or complete match, especially for rare or unclear subjects. Even in these cases, related categories or similar examples can guide the next step. Partial results still reduce uncertainty and confusion.

Seeing Lens as an assistant rather than a final authority helps manage expectations. It offers direction and context instead of absolute answers. This supports exploration, learning, and informed decision-making.

8. Future Directions for Visual Understanding Tools

Visual understanding tools continue to improve as models, data, and hardware develop together. While the basic idea behind tools like Google Lens stays the same, the experience becomes smoother and more reliable. Over time, improvements focus on accuracy, speed, and clarity rather than adding unnecessary complexity.

Future progress aims to reduce user effort while increasing confidence in results. The goal is to make visual recognition feel natural and dependable in everyday situations. Small refinements across many areas slowly shape a better overall experience.

8.1 Better Accuracy With Fewer Clues

Future models are expected to work well even when images are blurry, poorly lit, or taken quickly. Stronger learning allows the system to recognize objects and text with fewer visual signals. This makes the tool more forgiving in real-world conditions.

As accuracy improves, users will not need to carefully frame photos or take repeated attempts. Casual images taken in motion or low light can still produce useful results. This reduces effort and makes recognition more accessible.

8.2 Deeper Understanding Beyond Labels

Visual recognition may move beyond simply naming objects toward understanding how they are typically used. For example, a tool could be recognized along with its common purpose or typical environment. This adds meaning without overwhelming the user.

To support this, models combine visual patterns with broader knowledge. The challenge is delivering insights in a clear and simple way. Helpful context matters more than technical detail.

8.3 Smoother Real-Time Interaction

Real-time interaction continues to improve as processing becomes faster. Immediate highlights and suggestions can appear while the camera moves. This creates a more fluid experience.

The tool feels less like a scanner and more like a guide. Users can explore surroundings naturally without stopping. Smooth feedback supports curiosity and ease of use.

8.4 Expanding Support for Languages and Regions

Expanding support for languages and regions increases usefulness around the world. This requires collecting regional data and understanding local visual patterns. Recognition becomes more inclusive.

Local signs, products, and landmarks are easier to identify as coverage grows. This helps users in less documented areas. Broader support strengthens everyday reliability.

8.5 Clearer Explanations of Results

Clear explanations help users understand why a result appears. Highlighting matching areas builds trust in the system’s decisions. Transparency reduces confusion.

Understanding results also helps users take better photos next time. This improves accuracy naturally. Learning happens on both sides.

8.6 Keeping the Focus on Helpfulness

As features expand, staying helpful matters more than adding options. Tools should solve real problems without distraction. Simplicity supports confidence.

Google Lens works best when it quietly assists the user. It turns what is seen into useful information. The experience remains supportive and unobtrusive.